Concepts

At the core of llm-ui is useLLMOutput. This hook takes a single chat response from an LLM and breaks it into blocks.

Blocks

useLLMOutput takes blocks and fallbackBlock as parameters.

blocks is an array of block configurations that useLLMOutput attempts to match against the LLM output. fallbackBlock is used for parts of the chat response where no other block matches.

Example

Language model markdown output:

## Python

```python
print('Hello llm-ui!')
```

## Typescript

```typescript
console.log('Hello llm-ui!');
```

If we pass the following parameters to useLLMOutput:

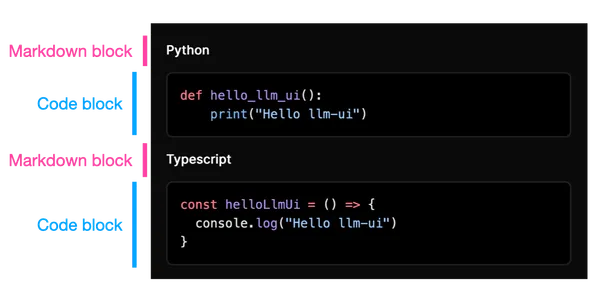

- blocks: [codeBlock], which matches code blocks starting with ```.
- fallbackBlock: markdownBlock, which assumes anything else is markdown.

useLLMOutput will break the chat response into code and markdown blocks.

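llm-ui breaks the example into four blocks, in order:

- markdown: the "## Python" heading
- code: the Python code block
- markdown: the "## Typescript" heading
- code: the TypeScript code block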
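To illustrate the idea of matching blocks with a fallback, here is a minimal sketch that splits a complete response into code and markdown blocks. It is not llm-ui's implementation: the names Block, BlockKind and splitIntoBlocks are hypothetical, and the sketch ignores streaming and partially received blocks, which the real hook has to handle.

```typescript
// Hypothetical types for illustration only; not llm-ui's API.
type BlockKind = "code" | "markdown";

interface Block {
  kind: BlockKind;
  text: string;
}

// Three backticks, built by concatenation so the fence never appears literally.
const FENCE = "`" + "`" + "`";

// Fallback: any non-empty text that is not a code block is treated as markdown.
function pushMarkdown(blocks: Block[], text: string): void {
  const trimmed = text.trim();
  if (trimmed !== "") {
    blocks.push({ kind: "markdown", text: trimmed });
  }
}

// Split a complete response into fenced code blocks and the markdown between them.
function splitIntoBlocks(output: string): Block[] {
  const blocks: Block[] = [];
  // Opening fence, the shortest possible body, then the closing fence.
  const codePattern = new RegExp(FENCE + "[\\s\\S]*?" + FENCE, "g");
  let cursor = 0;
  let match: RegExpExecArray | null;
  while ((match = codePattern.exec(output)) !== null) {
    pushMarkdown(blocks, output.slice(cursor, match.index));
    blocks.push({ kind: "code", text: match[0] });
    cursor = match.index + match[0].length;
  }
  pushMarkdown(blocks, output.slice(cursor));
  return blocks;
}

// The example response from this page, rebuilt line by line.
const example = [
  "## Python",
  "",
  FENCE + "python",
  "print('Hello llm-ui!')",
  FENCE,
  "",
  "## Typescript",
  "",
  FENCE + "typescript",
  "console.log('Hello llm-ui!');",
  FENCE,
].join("\n");

const kinds = splitIntoBlocks(example).map((block) => block.kind);
console.log(kinds);
```

The code matcher runs first and the markdown fallback only receives whatever text is left over, which mirrors the roles of blocks and fallbackBlock described above.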

Throttling

useLLMOutput also takes throttle as an argument. This function allows useLLMOutput to lag behind the actual LLM output.
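As a rough sketch of what lagging behind can mean, the function below decides how many characters to show on the next render while holding back a small buffer. Everything here is an assumption made for illustration: ThrottleInput, ThrottleOptions, nextVisibleLength, charsPerSecond and targetLagChars are hypothetical names, and the throttle function that useLLMOutput actually accepts may take different arguments.

```typescript
// Hypothetical shapes for illustration; llm-ui's real throttle signature may differ.
interface ThrottleInput {
  receivedLength: number; // characters received from the LLM so far
  visibleLength: number; // characters currently shown to the user
  elapsedMs: number; // time since the last render
  isStreamFinished: boolean;
}

interface ThrottleOptions {
  charsPerSecond: number; // steady reveal speed
  targetLagChars: number; // characters held back as a buffer while streaming
}

function nextVisibleLength(
  input: ThrottleInput,
  options: ThrottleOptions,
): number {
  // While streaming, hold back a buffer; once finished, everything may be shown.
  const ceiling = input.isStreamFinished
    ? input.receivedLength
    : Math.max(0, input.receivedLength - options.targetLagChars);
  // Characters we are allowed to reveal in the elapsed time.
  const budget = Math.floor((options.charsPerSecond * input.elapsedMs) / 1000);
  const next = Math.min(ceiling, input.visibleLength + budget);
  // Never hide text that is already visible.
  return Math.max(input.visibleLength, next);
}

const options: ThrottleOptions = { charsPerSecond: 100, targetLagChars: 10 };

const whileStreaming = nextVisibleLength(
  { receivedLength: 50, visibleLength: 20, elapsedMs: 100, isStreamFinished: false },
  options,
);

const afterFinish = nextVisibleLength(
  { receivedLength: 50, visibleLength: 45, elapsedMs: 100, isStreamFinished: true },
  options,
);

console.log(whileStreaming, afterFinish);
```

Because the buffer is only released once the stream has finished, the visible text can keep advancing at a steady pace even when the LLM briefly stops sending characters.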

Here is an example of llm-ui’s throttling in action:

# H1

Hi Docs

```typescript
console.log('hello')
```

The disadvantage of throttling is that the LLM output is delayed in reaching the user.

The benefits of throttling:

- llm-ui can smooth out pauses in the LLM’s streamed output.

- Blocks can hide ‘non-user’ characters from the user (e.g. ## in a markdown header).
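The second benefit can be pictured with a small sketch that separates the raw text of a header line from the text a user should see. The helper visibleHeaderText is hypothetical and only handles ATX-style markdown headers; it is not how llm-ui's markdown block is implemented.

```typescript
// Hypothetical helper for illustration; not part of llm-ui's API.
function visibleHeaderText(line: string): string {
  // An ATX-style header is 1 to 6 '#' characters followed by whitespace or end of line.
  const match = /^(#{1,6})(?:\s+|$)(.*)$/.exec(line);
  // Lines that are not headers are returned unchanged.
  return match === null ? line : match[2] ?? "";
}

const shownHeader = visibleHeaderText("## Python");
const shownPartial = visibleHeaderText("##");
const shownPlain = visibleHeaderText("plain text");

console.log([shownHeader, shownPartial, shownPlain]);
```

With a little lag, a block has time to recognise that the leading characters are header syntax before anything is rendered, so the user never sees the raw markers flash on screen.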